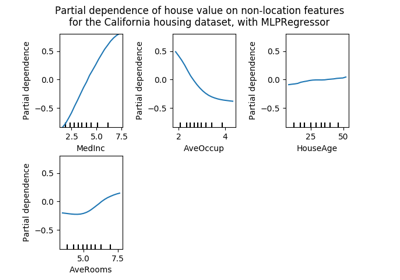

sklearn.inspection.partial_dependence¶

-

sklearn.inspection.partial_dependence(estimator, X, features, response_method='auto', percentiles=(0.05, 0.95), grid_resolution=100, method='auto')[source]¶ Partial dependence of

features.Partial dependence of a feature (or a set of features) corresponds to the average response of an estimator for each possible value of the feature.

Read more in the User Guide.

- Parameters

- estimatorBaseEstimator

A fitted estimator object implementing predict, predict_proba, or decision_function. Multioutput-multiclass classifiers are not supported.

- Xarray-like, shape (n_samples, n_features)

Xis used both to generate a grid of values for thefeatures, and to compute the averaged predictions when method is ‘brute’.- featureslist or array-like of int

The target features for which the partial dependency should be computed.

- response_method‘auto’, ‘predict_proba’ or ‘decision_function’, optional (default=’auto’)

Specifies whether to use predict_proba or decision_function as the target response. For regressors this parameter is ignored and the response is always the output of predict. By default, predict_proba is tried first and we revert to decision_function if it doesn’t exist. If

methodis ‘recursion’, the response is always the output of decision_function.- percentilestuple of float, optional (default=(0.05, 0.95))

The lower and upper percentile used to create the extreme values for the grid. Must be in [0, 1].

- grid_resolutionint, optional (default=100)

The number of equally spaced points on the grid, for each target feature.

- methodstr, optional (default=’auto’)

The method used to calculate the averaged predictions:

‘recursion’ is only supported for gradient boosting estimator (namely

GradientBoostingClassifier,GradientBoostingRegressor,HistGradientBoostingClassifier,HistGradientBoostingRegressor) but is more efficient in terms of speed. With this method,Xis only used to build the grid and the partial dependences are computed using the training data. This method does not account for theinitpredicor of the boosting process, which may lead to incorrect values (see warning below). With this method, the target response of a classifier is always the decision function, not the predicted probabilities.‘brute’ is supported for any estimator, but is more computationally intensive.

‘auto’:

‘recursion’ is used for

GradientBoostingClassifierandGradientBoostingRegressorifinit=None, and forHistGradientBoostingClassifierandHistGradientBoostingRegressor.‘brute’ is used for all other estimators.

- Returns

- averaged_predictionsndarray, shape (n_outputs, len(values[0]), len(values[1]), …)

The predictions for all the points in the grid, averaged over all samples in X (or over the training data if

methodis ‘recursion’).n_outputscorresponds to the number of classes in a multi-class setting, or to the number of tasks for multi-output regression. For classical regression and binary classificationn_outputs==1.n_values_feature_jcorresponds to the sizevalues[j].- valuesseq of 1d ndarrays

The values with which the grid has been created. The generated grid is a cartesian product of the arrays in

values.len(values) == len(features). The size of each arrayvalues[j]is eithergrid_resolution, or the number of unique values inX[:, j], whichever is smaller.

Warning

The ‘recursion’ method only works for gradient boosting estimators, and unlike the ‘brute’ method, it does not account for the

initpredictor of the boosting process. In practice this will produce the same values as ‘brute’ up to a constant offset in the target response, provided thatinitis a consant estimator (which is the default). However, as soon asinitis not a constant estimator, the partial dependence values are incorrect for ‘recursion’. This is not relevant forHistGradientBoostingClassifierandHistGradientBoostingRegressor, which do not have aninitparameter.See also

sklearn.inspection.plot_partial_dependencePlot partial dependence

Examples

>>> X = [[0, 0, 2], [1, 0, 0]] >>> y = [0, 1] >>> from sklearn.ensemble import GradientBoostingClassifier >>> gb = GradientBoostingClassifier(random_state=0).fit(X, y) >>> partial_dependence(gb, features=[0], X=X, percentiles=(0, 1), ... grid_resolution=2) (array([[-4.52..., 4.52...]]), [array([ 0., 1.])])